As we connect more and more wireless nodes to a basestation, each transmitting every 10 s or so, we quickly reach a situation where the basestation is trying to make several connections to the server every second.

The question is: does the rate of connections matter? Is it bad news for the emoncms server, and what is the effect on the local internet connection and router?

Although I have not investigated this in detail, I'm pretty sure I'm seeing an adverse effect on my internet connection when the post rate gets too high. I noticed this especially the other day, when I switched to a BT Home Hub after a lightning strike took out my old router. It would be great to get some good data on this.

Then there is the question of server load, which needs more investigation.

Jumping to a solution before the problem is properly characterized, the one that comes to mind is to queue the packets received from wireless nodes in the basestation and then do a bulk post at a slower rate, such as once every 20 s or longer.
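To put rough numbers on this, the short Python sketch below compares the connection rate when every packet is posted directly with the rate when packets are bulk posted every 20 s. It assumes every node transmits every 10 s; the node counts are made up for illustration.

```python
# Rough estimate of HTTP connections per second from basestation to server.
# Node counts and intervals are illustrative assumptions only.

def direct_post_rate(num_nodes: int, node_interval_s: float) -> float:
    """Posts per second when every received packet is forwarded immediately."""
    return num_nodes / node_interval_s


def bulk_post_rate(bulk_interval_s: float) -> float:
    """Posts per second when queued packets are sent together in one post."""
    return 1.0 / bulk_interval_s


if __name__ == "__main__":
    for nodes in (5, 20, 50):
        direct = direct_post_rate(nodes, 10.0)
        bulk = bulk_post_rate(20.0)
        print(f"{nodes:>3} nodes: direct {direct:.2f} posts/s, bulk {bulk:.2f} posts/s")
```

The bulk rate stays at one post every 20 s however many nodes there are; the trade-off is that readings reach the server up to 20 s later and have to be buffered on the basestation in the meantime.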

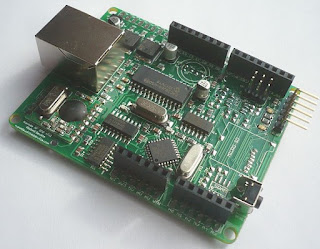

I have put together an initial working example of this, including code for both the NanodeRF basestation end and the emoncms server end.

Starting with the NanodeRF code:

https://github.com/openenergymonitor/NanodeRF/tree/master/NanodeRF_multinode_bulksend

The NanodeRF code queues received packets, recording a time offset for each one so that they can be stored in emoncms at the right time when they arrive (although the implementation of this is a little rough at the moment).

The packets are queued in a string that can be sent over Ethernet and then decoded simply in emoncms using PHP's `json_decode()`.
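As a rough illustration of the queueing idea, here is a small Python model of a basestation queue. It is not the NanodeRF code: the class name `BulkQueue`, the overflow check against a 400-character limit and the whole-second arrival times are assumptions made for this sketch. Each packet is stored as `[offset, node_id, values...]`, the format described below, with the offset measured from the first packet in the batch.

```python
class BulkQueue:
    """Hypothetical PC-side model of a basestation packet queue."""

    def __init__(self, max_chars: int = 400):
        self.max_chars = max_chars        # assumed buffer size, in characters
        self.entries: list[str] = []      # queued "[offset,node,values...]" strings
        self.first_time = None            # arrival time (s) of the first queued packet

    def add(self, node_id: int, values: list[int], now_s: int) -> bool:
        """Queue one packet; return False if it would overflow the buffer."""
        start = now_s if self.first_time is None else self.first_time
        fields = [now_s - start, node_id, *values]
        entry = "[" + ",".join(str(n) for n in fields) + "]"
        # Length of the whole "[e1,e2,...]" string if this entry were added.
        new_length = len(self.to_string()) + len(entry) + (1 if self.entries else 0)
        if new_length > self.max_chars:
            return False
        self.entries.append(entry)
        self.first_time = start
        return True

    def to_string(self) -> str:
        """The string that would be sent in the bulk post."""
        return "[" + ",".join(self.entries) + "]"

    def clear(self) -> None:
        """Empty the queue, e.g. after a successful post."""
        self.entries.clear()
        self.first_time = None


q = BulkQueue()
q.add(16, [1137], now_s=100)
q.add(17, [1437, 3164], now_s=102)
q.add(19, [1412, 3077], now_s=104)
print(q.to_string())  # [[0,16,1137],[2,17,1437,3164],[4,19,1412,3077]]
```

Rejecting a packet that would overflow the buffer, rather than truncating the string, keeps whatever is already queued decodable as JSON.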

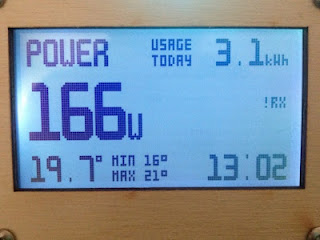

A string for 3 packets from the different nodes looks like this:

`[[0,16,1137],[2,17,1437,3164],[4,19,1412,3077]]`

The first node's packet is `[0,16,1137]`.

The first number in each packet is the time offset. For the first node it is 0, meaning its packet arrived at 0 seconds; the second node's packet arrived at 2 seconds and the third node's at 4 seconds.

The second number is the node ID, the unique identifier for the wireless node.

All the numbers after the first two are data values. The first node here (node 16) has only one data value: 1137.
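On the receiving side, each packet can be split into its offset, node ID and data values. The sketch below does this in Python with `json.loads`, standing in for what emoncms does with `json_decode()` in PHP; the `Packet` and `decode_bulk` names are hypothetical.

```python
import json
from typing import List, NamedTuple


class Packet(NamedTuple):
    offset_s: int        # seconds after the first packet in the batch
    node_id: int         # unique identifier of the wireless node
    values: List[int]    # everything after the first two numbers


def decode_bulk(data: str) -> List[Packet]:
    """Split a bulk string such as "[[0,16,1137],...]" into packets."""
    packets = []
    for entry in json.loads(data):
        # Raises ValueError if an entry has fewer than two numbers.
        offset_s, node_id, *values = entry
        packets.append(Packet(offset_s, node_id, values))
    return packets


for p in decode_bulk("[[0,16,1137],[2,17,1437,3164],[4,19,1412,3077]]"):
    print(p.offset_s, p.node_id, p.values)
```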

In the example, this string is sent to emoncms every 20 s. The string buffer has room for up to 400 characters, or about 22 low-power temperature node packets. It may be possible to extend this by another 200 characters, and much further with an SD card or another larger storage medium.
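The packet count follows from simple arithmetic: n packets of L characters need n·L characters, plus n - 1 commas and the two outer brackets, so n(L + 1) + 1 must not exceed the buffer size. The sketch below assumes a typical temperature-node packet with a two-digit offset, a node ID and two data values (17 characters), and ignores any string terminator; `packets_that_fit` is a hypothetical helper.

```python
def packets_that_fit(buffer_chars: int, entry: str) -> int:
    """How many copies of `entry` fit in a "[e,e,...,e]" string of buffer_chars.

    n entries of length L take n*L chars, plus n-1 commas and 2 outer
    brackets: n*(L + 1) + 1 <= buffer_chars.
    """
    return max(0, (buffer_chars - 1) // (len(entry) + 1))


# Assumed typical packet: two-digit offset, node id, two data values.
typical = "[18,19,1412,3077]"              # 17 characters
print(packets_that_fit(400, typical))      # 22
print(packets_that_fit(600, typical))      # 33
```

Packets with shorter offsets or fewer data values take less room, so in practice a few more may fit.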

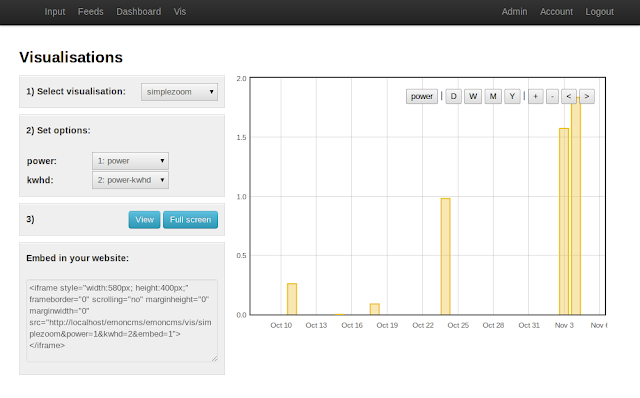

The emoncms API endpoint for sending bulk data is `emoncms.org/input/bulk?data=`
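A minimal sketch of building the request URL for a queued string, assuming plain HTTP and percent-encoding the data so the square brackets are safe inside a query string (PHP decodes the query parameter again before it reaches `json_decode()`). Any authentication, such as an API key, is left out because it is not covered here; `bulk_url` is a hypothetical helper.

```python
from urllib.parse import quote


def bulk_url(data: str, host: str = "emoncms.org") -> str:
    """Build the bulk-post URL for a queued string (plain HTTP assumed)."""
    # safe="" percent-encodes every reserved character, including [ ] and ,
    return f"http://{host}/input/bulk?data={quote(data, safe='')}"


print(bulk_url("[[0,16,1137]]"))
```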

Emoncms then decodes the string, registers and processes the inputs, and returns "ok".
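How the offsets map to stored times is the rough part mentioned earlier. One possible scheme, sketched in Python rather than emoncms's PHP, assumes the basestation also reports `queue_age_s`, the seconds between queuing its first packet and sending the post. That parameter, the `process_bulk` function and the `node16_1`-style input names are all hypothetical, not the emoncms API.

```python
import json
import time
from typing import List, Optional, Tuple


def process_bulk(data: str, queue_age_s: float,
                 received_at: Optional[float] = None) -> Tuple[str, List[tuple]]:
    """Hypothetical server-side handling of one bulk post.

    Assumes queue_age_s = seconds between the first packet being queued on
    the basestation and the post being sent (not part of the original API).
    """
    if received_at is None:
        received_at = time.time()
    batch_start = received_at - queue_age_s   # estimated arrival of the first packet
    rows = []
    for offset_s, node_id, *values in json.loads(data):
        timestamp = batch_start + offset_s
        for index, value in enumerate(values, start=1):
            # One hypothetical input per node and value position, e.g. "node16_1".
            rows.append((timestamp, f"node{node_id}_{index}", value))
    # A real server would now register and process these inputs; here we return them.
    return "ok", rows


status, rows = process_bulk("[[0,16,1137],[2,17,1437,3164]]",
                            queue_age_s=20, received_at=1000.0)
print(status, rows)
```

This ignores network delay between the basestation and the server, which is usually small compared with the 20 s batching interval.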

If you're interested in testing this out, here's the NanodeRF bulksend example again. If you're already using the NanodeRF_multinode code, this is a straight switch to the bulk send code:

https://github.com/openenergymonitor/NanodeRF/tree/master/NanodeRF_multinode_bulksend

If you're running your own server, you will need the latest emoncms version:

https://github.com/emoncms/emoncms

If you're using emoncms.org, it's all ready to go there.

There are a couple of open questions about the stability of this initial working example. I had a few problems with the queue string getting malformed, though only about once in every 20-30 successful string builds, and at the moment the NanodeRF does not reset itself using the watchdog after more than 10 unsuccessful attempts. A few extra eyes on the code would definitely help to improve it, and I will keep testing here.
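Two small safeguards could address these issues. The Python sketch below checks that a queue string is well-formed before it is posted (or when it arrives), and models a counter that signals a reset after more than 10 consecutive failed posts, as a watchdog reset would. The function and class names are hypothetical, and requiring at least one data value per packet is an assumption.

```python
import json


def is_valid_bulk(data: str) -> bool:
    """True if data is a non-empty JSON list of integer lists, each holding
    an offset, a node id and at least one data value (assumed minimum)."""
    try:
        packets = json.loads(data)
    except json.JSONDecodeError:
        return False
    if not isinstance(packets, list) or not packets:
        return False
    for entry in packets:
        if not isinstance(entry, list) or len(entry) < 3:
            return False
        # JSON true/false decode to bool, a subclass of int: reject them.
        if not all(isinstance(n, int) and not isinstance(n, bool) for n in entry):
            return False
    return True


class PostFailureCounter:
    """Hypothetical model of a reset-after-repeated-failures policy."""

    def __init__(self, max_failures: int = 10):
        self.max_failures = max_failures
        self.failures = 0  # consecutive failed posts

    def record(self, success: bool) -> bool:
        """Record one post attempt; return True when a reset is due."""
        if success:
            self.failures = 0
            return False
        self.failures += 1
        return self.failures > self.max_failures


print(is_valid_bulk("[[0,16,1137],[2,17,1437,3164]]"))  # True
print(is_valid_bulk("[[0,16,1137],[2,17,14"))           # False (truncated)
```

On the basestation, a malformed string could simply be discarded and the queue cleared, so that one bad build costs a single batch rather than blocking every later post.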