The last post detailed an implementation for exporting feed data from emoncms. This post explores an implementation for importing feed data, which can be used together with the export implementation to sync or download feed data from one emoncms instance to another, enabling full feed data portability.

I started by trying to create an import script that was built into a normal page action. You would go to a page, e.g.:

sync/feed?id=10...

The problem with this is that downloading and importing a feed takes a long time, so the page appears unresponsive for that time; the loading animation just turns and turns.

If you mistakenly click the refresh button, the initial process does not stop, so you start to get duplicate processes, which mess up the data and increase the server load.

Ideally while downloading and importing the data there would be a progress bar so that you can see what's happening and any other useful feedback if things go wrong.

To get this kind of feedback you need to be able to update the browser as the process is running.

You also want the syncing to happen directly between servers rather than from server to local computer to server. This is faster when both servers are remote and the local computer's internet connection is relatively slow. In other words, you want the import process handled in PHP, which runs server side (you could use another server-side language if you wanted).

The way to get feedback about what's happening while the import is handled in PHP is to have two separate scripts: one that handles the importing, and another that serves the GUI web page and can be refreshed regularly.

We can extend the idea of a separate import script to sort the problem of duplicate processes too by implementing a download and import queue.

When a user clicks to download/sync a feed in the sync page, the action behind it enters the details of the feed to be downloaded into the queue. At this point it can also check that the feed does not already exist in the queue.
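As a rough illustration of that duplicate check, here is a minimal sketch. It uses a plain PHP array as a hypothetical stand-in for the importqueue table, and `enqueue_feed()` is a made-up helper, not an emoncms function. The field names follow the columns the import script reads further down (baseurl, apikey, feedid, localfeedid).

```php
<?php
// Sketch only: $queue is a hypothetical in-memory stand-in for the
// importqueue table; enqueue_feed() is not part of emoncms.

function enqueue_feed(array &$queue, string $baseurl, string $apikey, int $feedid, int $localfeedid): bool
{
    // Refuse to add a feed that is already waiting in the queue.
    foreach ($queue as $item) {
        if ($item['baseurl'] === $baseurl && $item['feedid'] === $feedid) {
            return false;
        }
    }

    // Not queued yet, so append it to the end of the queue.
    $queue[] = [
        'baseurl'     => $baseurl,
        'apikey'      => $apikey,
        'feedid'      => $feedid,
        'localfeedid' => $localfeedid,
    ];
    return true;
}

$queue = [];
enqueue_feed($queue, 'http://remote.example/emoncms', 'APIKEY', 10, 3); // queued
enqueue_feed($queue, 'http://remote.example/emoncms', 'APIKEY', 10, 3); // ignored: duplicate
echo count($queue), " item(s) in queue\n"; // prints "1 item(s) in queue"
```

With a real database table, a unique index over the remote URL and feed id could enforce the same rule at the storage level.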

We can then run the import script independently of the GUI page, for example via a scheduled cron job that runs the script once a minute. We can also put a check in the script to ensure that only one instance of it is running at any given time.

The import script then checks if there are feeds to be downloaded in the import queue, working through each queue item sequentially.

An added advantage of the queue implementation is that it keeps the load that importing places on the server low, since only one feed is downloaded and imported at any given time.

So all in all this implementation:

- Enables feedback on import progress.

- Ensures we don’t get duplicate feed syncing processes.

- Ensures that the server load is kept under control.

On to the code. Here's the import script that works through the import queue, downloading and importing each item in turn. I would be interested to hear of any ways the efficiency of the export and import process can be improved.

<?php

// Ensure only one instance of the script can run at any one time.

$fp = fopen("importlock", "w");

if (! flock($fp, LOCK_EX | LOCK_NB)) { echo "Already running\n"; die; }

// Connect to the database

define('EMONCMS_EXEC', 1);

require "Includes/process_settings.php";

require "Includes/db.php";

switch(db_connect()) {

case 0: break;

case 1: break;

case 3: show_dbsettingserror_message(); die ;

}

// Fetch the import queue

$result = db_query("SELECT * FROM importqueue ORDER BY `queid` Asc");

// For each item in the queue

while($row = db_fetch_array($result))

{

$queid = $row['queid'];

$feedname = "feed_".intval($row['localfeedid']);

// Check if we have already downloaded part of the feed and get the last

// value entered so that we don't download and insert data that has already

// been inserted. This makes the utility useful for syncing in general,

// and in particular for backups that only download the latest changes.

$feed_result = db_query("SELECT time FROM $feedname ORDER BY time Desc LIMIT 1");

$feed_row = db_fetch_array($feed_result);

// Guard against an empty feed table, where no row is returned

$start = 0; if ($feed_row && $feed_row[0]) $start = $feed_row[0];

// Open the file served from the export page on the remote server

$url = $row['baseurl'].'/feed/export?apikey='.$row['apikey'].'&id='

.$row['feedid'].'&start='.$start;

echo "Opening file $url\n";

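$fh = @fopen( $url, 'r' );

// Skip this item if the remote file cannot be opened; it stays in the

// queue and is retried on the next run

if (!$fh) { echo "Could not open $url\n"; continue; }

// Read through the file

$i = 0;

// Reset the values string so rows left over from a previous feed

// are not inserted again into this one

$vals = "";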

while (($data = fgetcsv($fh, 0, ",")) !== FALSE)

{

$feedtime = $data[0]; $value = $data[1];

if ($feedtime!='' && $value!='')

{

$i++;

// Construct the values part of the query

if ($i!=1) $vals .= ',';

$vals .= "('$feedtime','$value')";

// Execute query every 400 rows (same block size as export script)

if ($i>=400)

{

$i = 0;

if ($vals) db_query("INSERT INTO $feedname (`time`,`data`) VALUES ".$vals);

$vals = "";

}

}

}

// If there are lines to be inserted left over insert them here at the end

if ($vals) db_query("INSERT INTO $feedname (`time`,`data`) VALUES ".$vals);

fclose($fh);

echo "Transfer complete\n";

echo "Deleting item $queid from queue\n";

db_query("DELETE FROM importqueue WHERE queid = $queid");

}

?>
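The batching step in the script can also be pulled out into a standalone function, which makes it easier to test on its own. The following is a minimal sketch, not the emoncms implementation: `build_insert_batches()` is a hypothetical helper, and it assumes that feed times are integer timestamps and feed values are numeric, so both are cast before being placed in the SQL text rather than inserted as raw CSV strings.

```php
<?php
// Sketch only: build_insert_batches() is a hypothetical helper.
// Assumption: time is an integer timestamp and data is numeric.

function build_insert_batches(iterable $rows, string $table, int $batchSize = 400): array
{
    $statements = [];
    $values = [];

    foreach ($rows as $row) {
        // Skip blank or incomplete lines, as the import loop does.
        if (!isset($row[0], $row[1]) || $row[0] === '' || $row[1] === '') {
            continue;
        }

        // Casting keeps arbitrary remote text out of the query string.
        $values[] = sprintf('(%d,%F)', (int) $row[0], (float) $row[1]);

        // Emit one multi-row INSERT per full batch.
        if (count($values) === $batchSize) {
            $statements[] = "INSERT INTO $table (`time`,`data`) VALUES " . implode(',', $values);
            $values = [];
        }
    }

    // Emit whatever is left over at the end of the file.
    if ($values) {
        $statements[] = "INSERT INTO $table (`time`,`data`) VALUES " . implode(',', $values);
    }

    return $statements;
}

// Example rows in the shape fgetcsv() returns, batched two at a time.
$rows = [['1000', '21.5'], ['1010', '22'], ['', ''], ['1020', '23']];
foreach (build_insert_batches($rows, 'feed_1', 2) as $sql) {
    echo $sql, "\n";
}
```

Each returned string could then be passed to db_query() in place of the inline string building, with the batch size left at 400 to match the export script.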

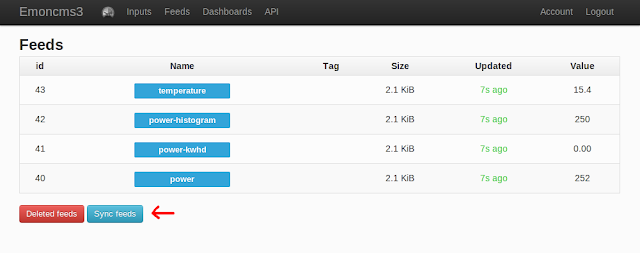

In the next post I will explain the interface for generating the feed import queue.