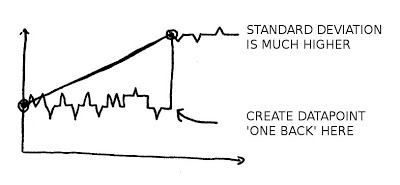

Removing redundant datapoints – algorithm 1

Removing redundant datapoints - part 1

- Can a good enough algorithm be developed to compress the data while retaining the detail we want?

- What are the implications for data query speeds?

Timestore timeseries database

http://www.mike-stirling.com/redmine/projects/timestore

Timestore is a lightweight time-series database developed by Mike Stirling. It uses a NoSQL approach to store an arbitrary number of time points without an index.
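Storing values at a fixed interval is what lets a database like this skip the index. The position of a value can be computed directly from its timestamp, so neither a timestamp column nor an index is needed. Below is a minimal sketch of that general idea. It assumes a single layer of 4-byte floats written at a fixed interval from a known start time. The class `FixedIntervalLayer` is hypothetical and does not reproduce timestore's actual file format.

```python
import struct

class FixedIntervalLayer:
    """Hypothetical in-memory layer: slot i holds the value for start + i * interval."""

    VALUE_SIZE = 4  # bytes per 32-bit float

    def __init__(self, start, interval):
        self.start = start        # unix timestamp of the first slot
        self.interval = interval  # seconds between slots
        self.data = bytearray()   # packed little-endian floats, no timestamps

    def _offset(self, timestamp):
        # The slot number comes straight from the timestamp, so no index is needed.
        slot = int((timestamp - self.start) // self.interval)
        return slot * self.VALUE_SIZE

    def write(self, timestamp, value):
        offset = self._offset(timestamp)
        if offset < 0:
            raise ValueError("timestamp is before the start of the layer")
        # Grow the buffer as needed; slots that were never written hold NaN.
        while len(self.data) < offset + self.VALUE_SIZE:
            self.data += struct.pack("<f", float("nan"))
        struct.pack_into("<f", self.data, offset, value)

    def read(self, timestamp):
        offset = self._offset(timestamp)
        if offset < 0 or offset + self.VALUE_SIZE > len(self.data):
            return None  # outside the stored range
        return struct.unpack_from("<f", self.data, offset)[0]


layer = FixedIntervalLayer(start=1368000000, interval=10)
layer.write(1368000000, 100.0)
layer.write(1368000030, 250.5)   # slots 1 and 2 are left as NaN
print(layer.read(1368000030))    # 250.5
print(len(layer.data))           # 16 (4 slots x 4 bytes)
```

Timestamps that fall between slots are rounded down to their slot. This is the same trade-off that makes the disk use figures below so small: only the values themselves are stored.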

Query speeds

Timestore is fast. Here are the figures given by Mike Stirling on the documentation page:

From the resulting data set containing 1M points spanning about 1 year on 30 second intervals:

Retrieve 100 points from the first hour: 2.6 ms

Retrieve 1000 points from the first hour (the hour only holds 120 points at 30 second intervals, so duplicates are inserted automatically): 6.2 ms

Retrieve 100 points over the entire dataset (about a year worth): 2.5 ms

Retrieve 1000 points over the entire dataset: 7.0 ms

Disk use

Timestore uses a double, which takes up 8 bytes, as its default data type. The current emoncms MySQL database stores data values as floats, which take up 4 bytes. It's easy to change the data type in timestore, so for a fair comparison we can change the default data type to a 4-byte float:

Layer 1: 10s layer = 3153600 datapoints x 4 bytes = 12614400 bytes

Layer 2: 60 layer1 datapoints averaged = 52560 datapoints x 4 bytes = 210240 Bytes

Layer 3: 10 layer2 datapoints averaged = 5256 datapoints x 4 bytes = 21024 bytes

Layer 4: 6 layer3 datapoints averaged = 876 datapoints x 4 bytes = 3504 bytes

Layer 5: 6 layer4 datapoints averaged = 146 datapoints x 4 bytes = 584 bytes

Layer 6: 4 layer5 datapoints averaged = 36 datapoints x 4 bytes = 144 bytes

Layer 7: 7 layer6 datapoints averaged = 5 datapoints x 4 bytes = 20 bytes

total size = 12849916 bytes or 12.25 MB

The current emoncms data storage implementation uses about 60 MB to hold the same data, because it stores a timestamp alongside every value as well as an index on the time field. Timestore therefore has the potential to reduce disk use by about 80% for realtime data feeds.

Interestingly, all the downsampled layers created by timestore only come to about 0.22 MB. Before doing the calculation above I thought that adding all the downsampled layers would add significantly to the disk space problem, but evidently they are a very small contribution compared with the full-resolution data layer.
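The layer arithmetic can be checked with a few lines of Python. This sketch simply reproduces the calculation in the text: 4-byte values, integer division at each averaging step (partial blocks are dropped) and the averaging factors listed per layer. It does not read timestore's own layer configuration, and the 60 MB comparison figure is the emoncms estimate quoted above.

```python
BYTES_PER_VALUE = 4                    # 4-byte float, as assumed in the text
BASE_POINTS = 365 * 24 * 3600 // 10    # one year of 10 s data = 3153600 points

# Number of points from the layer below that are averaged for layers 2 to 7
FACTORS = [60, 10, 6, 6, 4, 7]

def layer_sizes(base_points, factors, value_size):
    """Return (datapoints, bytes) for each layer; incomplete blocks are dropped."""
    points = [base_points]
    for factor in factors:
        points.append(points[-1] // factor)
    return [(n, n * value_size) for n in points]

sizes = layer_sizes(BASE_POINTS, FACTORS, BYTES_PER_VALUE)
total = sum(b for _, b in sizes)
downsampled = total - sizes[0][1]      # everything except the 10 s layer

for i, (n, b) in enumerate(sizes, start=1):
    print(f"Layer {i}: {n} datapoints x {BYTES_PER_VALUE} bytes = {b} bytes")
print(f"total = {total} bytes ({total / 2**20:.2f} MB)")
print(f"downsampled layers = {downsampled} bytes ({downsampled / 2**20:.2f} MB)")
print(f"reduction vs 60 MB: {1 - total / (60 * 2**20):.0%}")
```

Changing `BYTES_PER_VALUE` to 8 shows the cost of keeping timestore's default double.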

Emoncms timestore development branch

I have made a start on integrating timestore into emoncms. There's still a lot to do to make it fully functional, but it works as a demo for now. Here's how to set it up:

1) Download, make and start timestore

$ git clone http://mikestirling.co.uk/git/timestore.git

$ cd timestore

$ make

$ cd src

$ sudo ./timestore -d

Fetch the admin key

$ cd /var/lib/timestore

$ nano adminkey.txt

Copy the admin key, which looks something like this: POpP)@H=1[#MJYX<(i{YZ.0/Ni.5,g~<

The admin key is generated anew every time timestore is restarted.

2) Download and setup the emoncms timestore branch

Download a copy of the timestore development branch:

$ git clone -b timestore https://github.com/emoncms/emoncms.git timestore

Create a MySQL database for emoncms and enter the database settings into settings.php.

Add a line to settings.php with the timestore adminkey:

$timestore_adminkey = "POpP)@H=1[#MJYX<(i{YZ.0/Ni.5,g~<";
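Because the admin key changes every time timestore restarts, it can be handy to regenerate this line rather than copying the key by hand. The sketch below is a hypothetical helper, not part of emoncms or timestore. It reads the key file from step 1 and quotes the key as a PHP single-quoted string. Inside a double-quoted PHP string, a `$` or a backslash in a random key could be interpreted as variable interpolation or an escape sequence, whereas single quotes only treat backslash and single quote specially.

```python
from pathlib import Path

# Key file location used in step 1 above; adjust if yours differs.
ADMIN_KEY_FILE = Path("/var/lib/timestore/adminkey.txt")

def php_single_quoted(value):
    """Quote a string as a PHP single-quoted literal.

    Inside single quotes PHP only treats backslash and single quote specially,
    so a '$' or '{' in a random key cannot trigger variable interpolation.
    """
    escaped = value.replace("\\", "\\\\").replace("'", "\\'")
    return "'" + escaped + "'"

def settings_line(key_file=ADMIN_KEY_FILE):
    """Build the settings.php line from the admin key timestore wrote on startup."""
    key = key_file.read_text().rstrip("\r\n")  # drop only a trailing newline
    return "$timestore_adminkey = " + php_single_quoted(key) + ";"
```

Run `print(settings_line())` as a user that can read the key file (timestore was started with sudo above) and paste the output into settings.php after each timestore restart.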

Create a user and log in.

The development branch currently only implements timestore for realtime data, and the feed/data API is restricted to timestore data only, which means that daily data does not work. Using timestore for daily data still needs to be implemented.

The feed model methods implemented to use timestore so far are create, insert_data and get_data.

Try it out

Navigate to the feeds tab, click on the feed API helper and create a new feed by entering:

http://localhost/timestore/feed/create.json?name=power&type=1

It should return {"success":true,"feedid":1}

Navigate back to feeds, you should now see your power feed in the list.

Go back to the API helper to get the insert data API URL.

Call the insert data API a few times over, say, a minute (so that we have at least 6 datapoints, one every 10 seconds). Vary the value to make it more interesting:

http://localhost/timestore/feed/insert.json?id=1&value=100.0

Select the rawdata visualisation from the vis menu

http://localhost/timestore/vis/rawdata&feedid=1

Zoom to the last couple of minutes to see the data.
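The same walkthrough can be scripted instead of typed into the browser. This is a minimal sketch using only the two API URLs shown above and Python's standard library. The helper names are hypothetical. Authentication is an assumption: the browser steps rely on your login session, so a script will likely need to pass credentials through the `**auth` keyword arguments, for example emoncms's write API key as an `apikey` parameter, if your install accepts it.

```python
import json
import time
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "http://localhost/timestore"  # install path used in this walkthrough

def api_url(path, **params):
    """Build an API URL such as feed/create.json?name=power&type=1."""
    return f"{BASE_URL}/{path}?{urlencode(params)}"

def call(path, **params):
    """Send a GET request and decode the JSON reply."""
    with urlopen(api_url(path, **params)) as response:
        return json.loads(response.read().decode("utf-8"))

def create_feed(name, feed_type=1, **auth):
    """Create a realtime feed; the reply looks like {"success":true,"feedid":1}."""
    reply = call("feed/create.json", name=name, type=feed_type, **auth)
    return reply["feedid"]

def insert_values(feed_id, values, period=10, **auth):
    """Insert one value every `period` seconds so each lands in its own 10 s slot."""
    for value in values:
        call("feed/insert.json", id=feed_id, value=value, **auth)
        time.sleep(period)
```

For example, `feed_id = create_feed("power")` followed by `insert_values(feed_id, [100.0, 250.0, 180.0, 320.0, 90.0, 210.0])` takes about a minute and produces the six datapoints needed before opening the rawdata visualisation.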

I met Mike Stirling a little over a month ago in Chester for a beer and a chat, after Mike originally got in contact to let me know about timestore. We discussed data storage, secure authentication, low-cost temperature sensing and OpenTRV, the project Mike is working on. I think there could be great benefit in making what we're developing here with OpenEnergyMonitor interoperable with what Mike and others are developing with OpenTRV, especially as we develop more building heating and building fabric performance monitoring tools. This could all develop into a super nice open-source ecosystem of hardware and software tools for whole-building energy (both electric and heat) monitoring and control.

Check out Mike's blog here:

http://www.mike-stirling.com/

and http://www.earth.org.uk/open-source-programmable-thermostatic-radiator-valve.html

The current emoncms feed storage implementation

Let's say we want to store a year of 10 s data. There are 31536000 seconds in a year, and so 3153600 datapoints at a 10 s data rate.

A single datapoint is made up of a timestamp which is stored as an unsigned integer, which takes up 4 bytes, and a float data value which also takes up 4 bytes.

3153600 datapoints x 8 bytes per datapoint (table row) = 25228800 bytes, or about 24 MB

In addition to the feed data we also have a table index which speeds up queries considerably. The worst case index size can be estimated with the equation detailed on this page:

http://dev.mysql.com/doc/refman/5.0/en/key-space.html

index row size = (key_length+4) / 0.67

The key we are using is the time field, which is 4 bytes, so the index row size is (4 + 4) / 0.67 ≈ 12 bytes.

The index size for 3153600 datapoints is therefore approximately 3153600 x (4 + 4) / 0.67 ≈ 37.7 million bytes, or about 36 MB.

The total feed table size will therefore be approximately 24 + 36 = 60 MB.
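The estimate above can be reproduced in a few lines of Python. This sketch uses only the figures in the text: a 365-day year, 8-byte rows (4-byte timestamp plus 4-byte float) and the worst-case index formula from the MySQL page, where dividing by 0.67 allows for index blocks being only about two-thirds full. It ignores any other per-table overhead.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # 31536000
INTERVAL = 10                       # seconds between datapoints
ROW_BYTES = 4 + 4                   # unsigned int timestamp + float value
KEY_LENGTH = 4                      # the index key is the 4-byte time field
FILL_FACTOR = 0.67                  # worst-case index block fill from the MySQL estimate

def estimate_feed_table(seconds=SECONDS_PER_YEAR, interval=INTERVAL):
    """Return (datapoints, data bytes, worst-case index bytes) for one feed."""
    points = seconds // interval
    data_bytes = points * ROW_BYTES
    index_bytes = points * (KEY_LENGTH + 4) / FILL_FACTOR
    return points, data_bytes, index_bytes

points, data_bytes, index_bytes = estimate_feed_table()
MB = 2 ** 20
print(f"{points} datapoints")
print(f"data:  {data_bytes / MB:.1f} MB")
print(f"index: {index_bytes / MB:.1f} MB")
print(f"total: {(data_bytes + index_bytes) / MB:.1f} MB")
```

Passing a different `interval` shows how quickly the index comes to dominate at higher data rates, since it grows in step with the row count.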

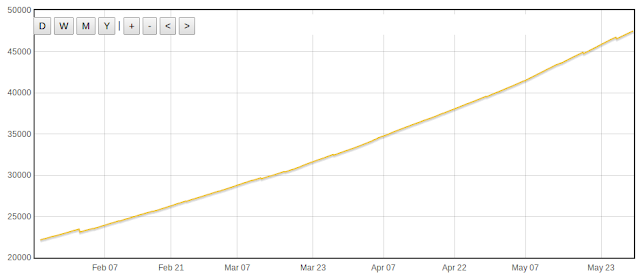

Emoncms.org load stats

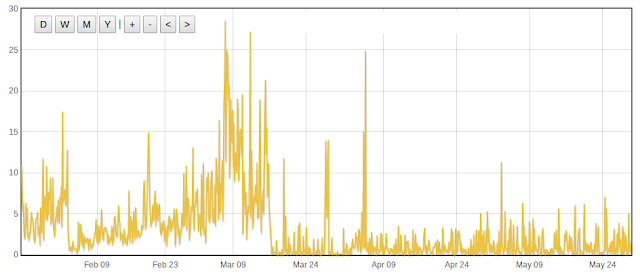

This graph shows the number of feeds that were updated at some point in the last 5 minutes (not the number of feed updates in the last 5 minutes, which is much higher). I use this graph to check that an emoncms update has not caused a big drop-off in active feeds: after an update I make sure that the number of active feeds returns to the same level.
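The difference between counting active feeds and counting feed updates can be made concrete with a small sketch. It assumes a hypothetical mapping from feed id to the timestamp of that feed's latest datapoint; this is not the actual emoncms.org schema or monitoring code. Each feed is counted once if its last update falls within the 5-minute window, no matter how many updates it received.

```python
import time

def count_active_feeds(last_updated, window=300, now=None):
    """Count feeds whose most recent update is at most `window` seconds old.

    last_updated maps feed id -> unix timestamp of that feed's latest datapoint,
    so a feed updated every 10 s still contributes only 1 to the count.
    """
    if now is None:
        now = time.time()
    return sum(1 for t in last_updated.values() if now - t <= window)
```

For example, `count_active_feeds({1: 1550, 2: 1300, 3: 1299}, now=1600)` counts feeds 1 and 2 but not feed 3, whose last update is 301 seconds old.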

The number of active feeds has grown from around 1350 to 2100 (an increase of 750) since early March 2013, just over 300 new active feeds a month. The total number of feeds ever created is 15660; some of these will have been deleted and replaced with new feeds, and some are simply inactive.

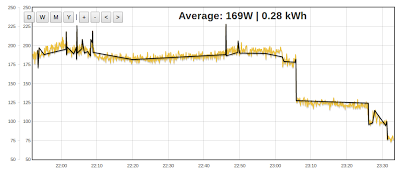

Zooming in again on the last 4 hours shows that about 120 feeds are updated on a timescale longer than 5 minutes, and there doesn't seem to be a clear correlation between the server load spikes above and the update spikes here. Some kind of mechanism to even out the load could be a beneficial feature to look into.

Recent commits to emoncms

There have been many great recent commits to emoncms thanks to PlaneteDomo, Baptiste Gaultier, Simon Stamm, Erik Karlsson (IntoMethod), Bryan Mayland (CapnBry), Ildefonso Martínez, Paul Reed and Jerome, including improved translations, the ability to translate JavaScript, query speed-ups, a working "remember me" implementation and work on the Raspberry Pi module. I thought I'd write this blog post to draw attention to the great contributions being made and so that credit goes where it's due:

Summary of additions:

PlaneteDomo - Implemented a clean way to translate text previously defined in JavaScript: https://github.com/emoncms/emoncms/pull/72

Baptiste Gaultier (bgaultier) - A lot of French translation work

Simon Stamm - Added the ability to display yen and euro in the zoom visualisation, including an option to place the currency after the value (1 = after value, 0 = before value):

https://github.com/simonstamm/emoncms/commit/39af426ecd9eabffefbc12712bfea9ed2503a5f5

He also fixed an issue with floatval and json_decode: https://github.com/emoncms/emoncms/pull/78

Erik Karlsson (IntoMethod) - Fixed dashboard height issue, thanks to Paul Reed for reporting this bug on the forums: http://openenergymonitor.org/emon/node/2013

Erik also added asynchronous AJAX calls for some visualisations, which makes the dashboard feel a lot snappier; page load is about 4-5 times faster: https://github.com/emoncms/emoncms/pull/71

Erik also fixed the "remember me" implementation, which I had failed to get working properly. This is a really significant fix that I've been enjoying: https://github.com/emoncms/emoncms/pull/69

Bryan Mayland (CapnBry) - Improved feed/data request query times by adding a third query type that uses the MySQL average method for times less than 50 hours (180,000 seconds): https://github.com/emoncms/emoncms/pull/63

Ildefonso Martínez (ildemartinez) - JavaScript code refactoring

Paul Reed - tab between fields when logging in, average field in visualisations moved to the right.

Jerome (Jerome-github) - A lot of work on the Raspberry Pi emoncms module, including continued work on the Python gateway script. For ongoing discussion of Raspberry Pi module development, see the GitHub issues page here: https://github.com/emoncms/raspberrypi/issues/30

I'd really like to thank these guys and everyone who continues to help out with development; there's a lot of hard work going in that's really pushing things forward.

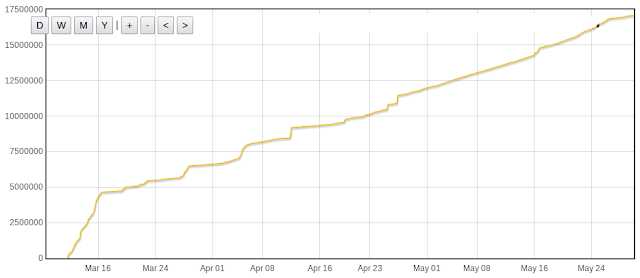

Emoncms.org backup

I'm happy to announce that emoncms.org is now fully backed up, with incremental backup implemented: all data is incrementally backed up once every 24 hours. A backup cron job runs hourly, syncing 640 feeds each time, so 15360 feeds are synced every 24 hours. emoncms.org disk use is currently growing at about 300 MB a day, and the transfer format is CSV, which gives you an idea of the volume of data the backup implementation needs to sync. The total volume of data synced so far using this method is 49 GB.

The backup implementation uses many of the things already developed as part of the sync module which allows you to download feed data from a remote server. I've put the full emoncms backup script in the tools repository on github here:

https://github.com/emoncms/usefulscripts/blob/master/backupemoncms.php

For the above script to run, you first need to copy the users and feeds tables from the master server to the backup server using the more common backup procedure of mysqldump and scp. The steps to do this are described in the header comments of the backup script.

This method of backing up is much faster than rsync, which I originally tried for incremental backup, because it does not go through each feed looking for changes. It just checks the time of the last datapoint in the backed-up feed and downloads every datapoint recorded on the master server after that time. One disadvantage is that changes made to feed data with the datapoint editor tools in emoncms will not be propagated to the backup server. It would also be good to make it possible to delete data on the backup server when it's deleted from the master server, since disk space is expensive and, from a data privacy point of view, if you delete data from emoncms.org you would expect no copy to remain.

I implemented the backup system like this because I already had most of what I needed in the sync module, so it was the quickest way to get it up and running using what I already knew. I'm aware that database replication can be performed with MySQL replication, where a transaction log is stored on the master server, transferred to the backup server and executed there. I'm interested in exploring this option too, and if anyone can tell me whether MySQL replication would offer significant performance benefits over the method above, and why, that would certainly motivate me to look at it sooner.

I'm still reluctant to guarantee data on emoncms.org, as both VM servers are in the same datacenter and are part of the BigV cloud, which could even mean that both share the same disk (defeating one of the reasons for a separate backup: protection against disk failure), although BigV suggested that this is unlikely as there are plenty of tails. They recognise this as a weakness and hope to change it soon.
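The incremental approach comes down to "find the newest timestamp in the backup, then copy everything newer from the master". Below is a minimal in-memory sketch of that logic, assuming each feed is a list of (timestamp, value) pairs sorted by time. The function names are hypothetical; the real backupemoncms.php script fetches the new data from the master server as CSV rather than filtering Python lists.

```python
def last_timestamp(points):
    """Timestamp of the newest datapoint already in the backup, or None if empty."""
    return points[-1][0] if points else None

def fetch_after(master_points, since):
    """Stand-in for downloading from the master: every point newer than `since`.

    A real implementation would ask the master server for rows with a
    timestamp greater than `since` instead of filtering a local list.
    """
    if since is None:
        return list(master_points)
    return [p for p in master_points if p[0] > since]

def sync_feed(master_points, backup_points):
    """Append to the backup every master datapoint newer than its last one.

    Older rows are never compared, so edits or deletions made on the master
    after a row was copied are not picked up.
    """
    new_points = fetch_after(master_points, last_timestamp(backup_points))
    backup_points.extend(new_points)
    return len(new_points)

def sync_all(master, backup):
    """Sync every feed; both arguments map feed id -> list of (timestamp, value)."""
    return {feed_id: sync_feed(points, backup.setdefault(feed_id, []))
            for feed_id, points in master.items()}
```

Running `sync_all` a second time with no new data on the master transfers nothing, which is why this is cheaper than scanning every feed for changes. The comment in `sync_feed` marks the limitation described above: edits to already-copied rows are not detected.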

So if you want extra peace of mind, I suggest installing emoncms locally on your own computer and downloading your data periodically using the sync module. I do this both for extra backup and so that I can access the raw data to try out new visualisation, data analysis and processing ideas. I will write a guide on how to do this soon. The sync module is available here:

http://github.com/emoncms/sync

I want to be transparent about how emoncms.org is hosted so that, rather than relying on opaque promises, you can assess things like how it's backed up for yourself. People often say that no system is absolutely secure and completely safe from failure, so I hope that by being transparent you can see what has been done. I'm relatively new to administering web services, and if you're a more experienced web admin reading this you may well know how this could be done better; I would appreciate hearing how you think it could be improved.

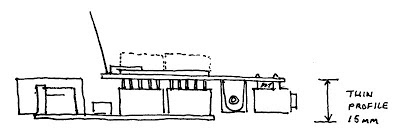

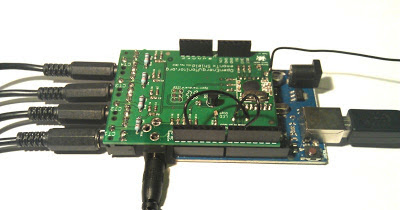

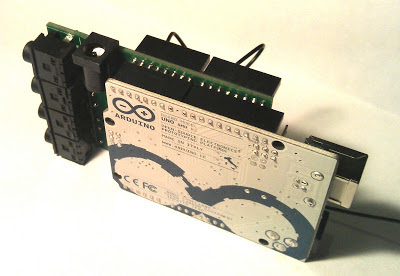

12 input pulse counter idea

- 12-input pulse counter

- Optional pull-down resistor (SMT or through-hole); see the building blocks pages linked above for why pull-down resistors are required.

- Input status LED, driven by the pulse signal.

- Dedicated ATmega for pulse counting

- Serial connection to a second ATmega used for Ethernet and/or RFM12 comms.

- Enclosed in a DIN rail mounted enclosure.
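On the dedicated pulse-counting ATmega, the core job is detecting rising edges on all 12 inputs. As an illustration only, here is a Python simulation rather than AVR firmware. It assumes the inputs are polled and each poll yields a 12-bit snapshot of the pin levels; a real design might use pin-change interrupts instead, and debouncing is not handled here.

```python
NUM_INPUTS = 12
INPUT_MASK = (1 << NUM_INPUTS) - 1  # bits 0..11, one per input

def count_pulses(samples, initial_state=0):
    """Count rising edges on each of 12 inputs from successive pin snapshots.

    samples: iterable of integers, one per poll, where bit i is the level of input i.
    Returns a list of 12 pulse counts.
    """
    counts = [0] * NUM_INPUTS
    previous = initial_state & INPUT_MASK
    for sample in samples:
        current = sample & INPUT_MASK
        rising = current & ~previous  # bits that went from 0 to 1 since the last poll
        for i in range(NUM_INPUTS):
            if (rising >> i) & 1:
                counts[i] += 1
        previous = current
    return counts
```

Comparing whole snapshots with one AND-NOT finds edges on all inputs at once, so the per-poll cost stays small; the counts would then be passed over the serial link to the comms ATmega.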