I've just created a tutorial that covers the basics of creating a simple custom display module for emoncms. The module shows a readout of current home power use and how many kWh have been used today.

The tutorial is up on GitHub here:

https://github.com/emoncms/development/blob/master/Modules/myelectric_tutorial/readme.md

What should I expand on in the emoncms module building tutorial next?

Developing for Arduino remotely on a Raspberry Pi

I found myself tonight needing to develop for Arduino remotely on a Raspberry Pi. The use case is an Arduino Uno connected to a Raspberry Pi to receive data from my Bee Hive Temperature Monitor setup. The Arduino posts data received from the Bee Monitor as a serial string onto ttyUSB0, and the EmoncmsPythonLink script posts the data to the emoncms server running locally on the Raspberry Pi, with the file system on an external hard drive. The Pi also has an RFM12Pi fitted, receiving data from an emonTx and a couple of emonTHs.
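To make the serial-to-emoncms step more concrete, here is a minimal Python sketch of the idea. It is not the EmoncmsPythonLink script itself. It assumes the serial line is a node id followed by space-separated values, and that the local emoncms accepts readings at an `input/post.json` endpoint with `node`, `csv` and `apikey` parameters; check both against your own setup. The function names are made up for illustration.

```python
from urllib.parse import urlencode


def parse_serial_line(line):
    """Split a serial line like '10 21.5 35.0' into (node_id, values).

    The 'node id then values' layout is an assumption for this sketch.
    """
    fields = line.strip().split()
    if len(fields) < 2:
        raise ValueError("expected a node id followed by at least one value")
    node_id = int(fields[0])
    values = [float(f) for f in fields[1:]]
    return node_id, values


def build_post_url(base_url, apikey, node_id, values):
    """Build the URL that would post the values to an emoncms server.

    The endpoint path and parameter names are assumed, not confirmed here.
    """
    csv = ",".join(str(v) for v in values)
    # Keep the commas readable in the query string.
    query = urlencode({"node": node_id, "csv": csv, "apikey": apikey}, safe=",")
    return f"{base_url}/input/post.json?{query}"


if __name__ == "__main__":
    node, readings = parse_serial_line("10 21.5 35.0\r\n")
    print(build_post_url("http://localhost/emoncms", "YOUR_APIKEY", node, readings))
```

Reading the line from ttyUSB0 and sending the request are left out on purpose, so the sketch runs without any hardware attached.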

I wanted to be able to access the Arduino and upload a new sketch remotely (to fix bugs etc.), since I will not always have local access to the system.

I came across a great Arduino command line build environment called inotool:

http://inotool.org/

$ sudo apt-get update

$ sudo apt-get install arduino

$ sudo apt-get install python-dev python-setuptools

$ git clone git://github.com/amperka/ino.git

$ cd ino

$ sudo python setup.py install

If you want to use ino's built-in serial terminal, also install picocom:

$ sudo apt-get install picocom

To exit the picocom serial terminal, press [CTRL + A] followed by [CTRL + X].

$ ino init -t blink # initiates a project using "blink" as a template, copy libraries into

$ ino build # compiles the sketch, creating .hex file (default Arduino uno -m atmega328 for duemilanove)

$ ino upload # uploads the .hex file

The setup works well. After opening the port on the router, I can now ssh into the Pi and upload new sketches to the Arduino from anywhere on the web.
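If you end up running the build and upload steps often over ssh, they can be wrapped in a few lines of Python. This is only a sketch: it assumes `ino` is on the PATH, that the board is an Uno on /dev/ttyUSB0, and that the `-m` (board model) and `-p` (serial port) options behave as described in the ino documentation. Run `ino build --help` and `ino upload --help` to confirm on your install. The function names are my own.

```python
import subprocess


def ino_commands(model="uno", port="/dev/ttyUSB0"):
    """Return the ino command lines for a build followed by an upload."""
    return [
        ["ino", "build", "-m", model],
        ["ino", "upload", "-m", model, "-p", port],
    ]


def build_and_upload(project_dir, model="uno", port="/dev/ttyUSB0"):
    """Run each command inside the project folder, stopping on the first failure."""
    for cmd in ino_commands(model, port):
        # check=True raises CalledProcessError if ino exits with an error,
        # so a failed build is never followed by an upload.
        subprocess.run(cmd, cwd=project_dir, check=True)


if __name__ == "__main__":
    for cmd in ino_commands():
        print(" ".join(cmd))
```

Calling `build_and_upload("/home/pi/blink")` (a hypothetical project path) would then compile and flash in one go.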

Emoncms control developments and demos

First, Happy Christmas everyone for yesterday!

One of the things I enjoy about the Christmas period is that you get some nice time to relax, and with relaxation come ideas and energy to try out some new things.

Glyn and I had been talking about control interfaces that could work well on a mobile or tablet, and I'd been working on a touch-enabled dial earlier this month, so I thought I'd package it up as an emoncms module that sets the control variables in the packetgen module. I've also created a couple of simpler control interface examples using buttons and edit boxes. To try out the following demos for yourself, see the steps at the bottom of the post.

Example 1: Heating On/Off

A simple example using a Bootstrap-styled HTML button as our heating on/off button. Clicking the button toggles the state and updates the “packetgen” control packet with the new state.

Example 2: Heating On/Off plus set point

Very similar to the first example but now with a text input for setting the set point temperature for the room/building. The microcontroller on the control node checks the room temperature and turns the heater on and off depending on the setpoint setting it receives from emoncms.
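The decision the control node makes can be sketched in a few lines. This is written in Python for readability rather than as the Arduino sketch itself, and the hysteresis band is my own assumption (the post only says the heater is switched depending on the setpoint); it stops the heater chattering when the room sits right at the set point.

```python
def heater_should_run(room_temp, setpoint, heating_enabled, currently_on, hysteresis=0.5):
    """Decide whether the heater relay should be on.

    hysteresis is an assumed dead band in degrees C around the setpoint.
    """
    if not heating_enabled:
        return False          # heating switched off from emoncms
    if room_temp < setpoint - hysteresis:
        return True           # clearly too cold: heat
    if room_temp > setpoint + hysteresis:
        return False          # clearly warm enough: stop
    return currently_on       # inside the band: keep the current state


if __name__ == "__main__":
    print(heater_should_run(17.0, 19.0, True, False))   # cold room, heating enabled
    print(heater_should_run(19.2, 19.0, True, True))    # inside the band, stays on
```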

Example 3: Nest inspired dial

A Nest-inspired dial interface for setting the setpoint temperature. The set point can be adjusted by spinning the dial wheel with your finger:

The dial and page resize to fit different screen types, from widescreen laptops to mobile phones and tablets, and touch can be used to spin the dial.

Solar PV Generation

Not really control, but developing the idea of custom html/javascript canvas based graphics further. I've always liked the standard “news & weather” app that came with my android phone. It's a good example of how clean and intuitive graphs can be and how touch can be used to browse temperature/humidity at different times of the day.

This example is a start on creating a graph that looks and behaves in much the same way implemented in canvas.

What you will need to run these examples

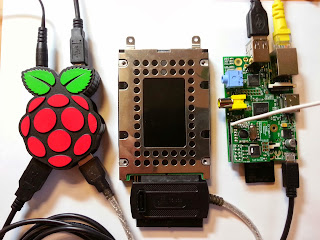

You will need your own installation of emoncms to try these out, such as an emoncms + raspberrypi + harddrive setup.

You will also need the packetgen module (installed as default with the harddrive image):

http://github.com/emoncms/packetgen

and the 'development' repository

http://github.com/emoncms/development

Copy the folder titled myheating (found in development/Modules/control in the development repository) to your emoncms/Modules folder.

You should now be able to load the examples by clicking on the My Heating menu item.

The example does not yet allow for any GUI-based configuration and so assumes that the control packet structure looks like this:

This packet will work with the Arduino electricradiator control node example that can be found here:

Arduino Examples / electricradiator

This sketch could be run on an emonTx with JeePlug relays attached, or on other RFM12B + Arduino + relay type hardware builds.

Coding your own control interface

The code for the simple button example above which may be useful as a foundation for building something else can be found here:

https://github.com/emoncms/development/blob/master/Modules/control/myheating/button.php

The interface is all in HTML and JavaScript (jQuery) and uses Bootstrap-style buttons. It just sends the updated heating state to the packetgen module via ajax.

More posts on controlling things

Glyn's post on controlling his boiler: http://openenergymonitor.blogspot.co.uk/2013/12/emoncms-early-heating-control-demo.html

The packet generator: http://openenergymonitor.blogspot.co.uk/2013/11/adding-control-to-emoncms-rfm12b-packet.html

Emoncms (early) Heating Control Demo

I've just hooked up a relay to control my central heating boiler and am successfully controlling it via the new emoncms packet generator module...it feels like the future has arrived in my house :-)

The next step will be to make a nice user facing interface which allows setting heating control time schedules and pulling in temperature data from emonTH and even making it smart by using energy data to try and detect if someone is home or not. Lots of exciting possibilities now the enabling hardware is in place.

Please excuse the old boiler, yes I know it should be a no brainer to replace it with a modern efficient condensing unit however things are never simple in rented accommodation. In practice I hardly use the boiler since bottled LPG is very expensive (no mains gas available here), luckily I have a wood stove and a good supply of wood!

| Gas Combi Boiler Relay Control Unit top left |

Here's a demo of the heating boiler being turned on remotely:

Hardware:

Webserver: Raspberry Pi with RFM12Pi expansion module running emoncms (on external HDD file system): see blog post. The Raspberry Pi and emoncms are also used to log my home power consumption from my emonTx V3, and room temperatures and humidity from my emonTHs. It also mirrors the data to a remote emoncms server for backup.

| Raspberry Pi with RFM12Pi module with emoncms running locally on HDD file system |

Control Node:

If switching mains voltage is involved, only undertake tasks you are comfortable with, make sure everything is isolated before starting work and don't take any chances.

Heating systems vary widely, as does the type of control available. Some heating boilers can be controlled via an RF protocol; this could be preferable since tapping into the boiler directly and switching high voltage would not be required.

My old Worcester Bosch 240 with Honeywell controller requires connecting two terminals together to switch the heating on (see image below). I managed to wire in an additional control wire while keeping the original timer unit in place.

I used an emonTx V2 with a JeeLabs relay module. This is just what I had to hand; any RFM12B node with an appropriate relay module could be used. We're looking into making a prototype PCB with an RFM12B, relay unit, on-board power supply and in-line energy monitoring (why not?!). The important consideration here is to make sure everything is properly enclosed and wired up to a high standard if you're controlling mains voltage. DON'T TAKE CHANCES WITH MAINS VOLTAGE.

It's important that the relay module used can handle the current being switched. In my case this was only about 500mA @ 240V AC, but if you're controlling an electric heater directly this is an important consideration.
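As a rough worked example of that check, the current through the relay follows from I = P / V for a resistive load. The 2 kW heater and the 5 A relay rating below are made-up figures for illustration only; always use the ratings from your own relay's datasheet.

```python
def load_current_amps(power_w, voltage_v=240.0):
    """Current drawn by a resistive load: I = P / V."""
    if voltage_v <= 0:
        raise ValueError("voltage must be positive")
    return power_w / voltage_v


def relay_can_switch(load_a, relay_rating_a):
    """True if the load current is within the relay's rated switching current."""
    return load_a <= relay_rating_a


if __name__ == "__main__":
    boiler_control_a = 0.5                  # about 500mA, as measured above
    heater_a = load_current_amps(2000.0)    # hypothetical 2 kW electric heater
    relay_rating_a = 5.0                    # hypothetical relay rating
    print(relay_can_switch(boiler_control_a, relay_rating_a))
    print(round(heater_a, 2), relay_can_switch(heater_a, relay_rating_a))
```

With these example figures the boiler control circuit is comfortably within the rating, while the heater at roughly 8.3 A is not.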

| Old Honeywell timer controller unit with front face removed - connect terminal 5 to 3 to turn heating on - black wire goes to relay (caution 240V AC) |

| emonTx V2 with RFM12B and JeeLabs relay module |

Software:

Emoncms Raspberry Pi Server: emoncms running on Raspberry Pi with the new packet generator module, see blog post. This is included in the emoncms Raspberry Pi external HDD image (it's still in beta). For the moment, as shown in the video, the relay is switched on and off from the emoncms backend web page by browsing to the Pi's local IP address, logging into emoncms and selecting the packet gen module under the extras menu.

| emoncms RFM12B control packet generator module - beta |

To enable control from outside the house, the HTTP port would have to be opened on my router's firewall and dynamic DNS set up on the external IP address. As mentioned at the beginning of this post, the next stage is to design a user-facing interface dashboard for emoncms control modules.

Arduino Firmware:

I've pushed the Arduino sketch running on the emonTx to the emoncms packetgen module GitHub as an example.

Fail safe

I chose a non-latching relay so that in case of control unit power failure the relay will default to the open / off position.

I've tried to make the code on the relay control unit as fail safe as possible (independent of Raspberry Pi and network) and as boiler friendly as possible by including a fail safe time after which the relay will default to off (1hr - I'm a frugal user of heating), and a maximum switching rate of 5 min to stop the boiler repeatedly being switched on and off should something go wrong. A hardware watchdog on the ATmega328 was included to try and ensure the microprocessor never locks up.
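The fail-safe behaviour described above can be sketched as a small state machine. This is a Python illustration, not the Arduino sketch on GitHub. It assumes the 1 hour fail-safe is measured from the last control packet received, and that the 5 minute minimum switching interval applies to switching off as well as on. The class and method names are invented for this example.

```python
class RelayGuard:
    """Applies a fail-safe timeout and a minimum switching interval to a relay."""

    def __init__(self, failsafe_s=3600, min_switch_s=300):
        self.failsafe_s = failsafe_s      # default to off if no packet for this long
        self.min_switch_s = min_switch_s  # minimum time between relay changes
        self.relay_on = False
        self.requested = False
        self.last_packet_t = None
        self.last_switch_t = None

    def packet_received(self, want_on, now):
        """Record the state requested by the latest control packet."""
        self.requested = bool(want_on)
        self.last_packet_t = now

    def update(self, now):
        """Return the relay state for time `now` (seconds)."""
        stale = (self.last_packet_t is None
                 or now - self.last_packet_t > self.failsafe_s)
        target = self.requested and not stale
        if target != self.relay_on:
            can_switch = (self.last_switch_t is None
                          or now - self.last_switch_t >= self.min_switch_s)
            if can_switch:
                self.relay_on = target
                self.last_switch_t = now
        return self.relay_on


if __name__ == "__main__":
    guard = RelayGuard()
    guard.packet_received(True, now=0)
    print(guard.update(0))      # relay switches on
    guard.packet_received(False, now=60)
    print(guard.update(60))     # still on: too soon to switch again
    print(guard.update(300))    # off once 5 minutes have passed
```

On the real node the hardware watchdog sits underneath all of this, and the non-latching relay covers the loss-of-power case.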

emonTx Family Tree

Now that the new emonTx V3 has been launched in the shop, all pre-orders have been shipped and documentation for the new units is slowly coming together this feels like a good time to take stock of where we've come from in the last couple of years.

It's been interesting this evening to look back through old photos. Here are a few photos of us etching our first emonTx PCB ourselves using a laser printed transfer and horrible acid, not recommended!

| Etching the first emonTx V0.1 in Jan 2011 |

| First emonTx V0.1 prototype in Jan 2011 |

Needless to say the results were not great. Still, a fun experience.

| emonTx V1 - May 2011 |

| emonTx V2 - March 2012 |

The emonTx V2.2 design has been the backbone of the project for the last year and a half; in that time we've sold almost 5000 of these! They have been shipped all over the world, from Hawaii to Korea and most places in between!

| emonTx SMT prototype V? - Aug 2012 |

In the summer of 2012 we worked on an emonTx SMT prototype. We threw all the bells and whistles into this, basing the design on an Atmel energy monitoring app note. This design was based on the ATmega32U4 and would be very accurate, with adjustable-gain pre-amps on the CT channels. However, after building a first prototype (which worked!) we realised that the price of this unit would probably be more than most would be willing to part with for an energy monitor, keeping in mind that a web-connected base station and LCD display would probably also be desired for a full monitoring system. We also realised that for our first intro into SMT manufacture this design, with its very extensive BOM, was perhaps a little ambitious.

The first emonTx SMT design was shelved (at least for the time being) and we put our efforts into designing a simpler, more streamlined unit with performance on par with the emonTx V2, which is good enough for most energy monitoring applications. Here was born the emonTx V3:

| emonTx V3 - Dec 2013 |

The emonTx V3 was designed to be easily SMT manufactured, fit nicely into a tidy wall mountable enclosure, be flexible in terms of RF and MCU and have some useful additional features such as the ability to power the unit from a single AC power adapter which also provides an AC signal for Real Power and Irms calculations.

| emonTx V3 SMT pre-assembled |

| The finished emonTx V3 unit |

| Feeling like a proud parent as the first emonTx V3 is shipped...while also rocking a Movember! |

We're proud of where we have got to, however (as always!) there is still much work to do. Hardware wise we plan to make the emonGLCD pre-assembled SMT and (hopefully) reduce its cost in 2014 and also dip our toes into control of appliances as well as making system setup as easy as possible.

Thanks for your continued support along this journey. We would not have achieved half as much if we were not standing on the shoulders of many other open-source projects and community contributions.

emonTx V3 AC Adapter Socket Error

Just a quick post to highlight a small bug in the first batch of emonTx V3...there had to be something!

The barrel-jack socket on the first batch of emonTx V3s has a middle pin with a diameter of 2.5mm. Our adapters don't fit since they are 2.1mm. Doh!

To solve this we're shipping a little adapter unit with each emonTx V3:

The AC-AC adapter is important and very useful on the emonTx V3 since it can power the unit as well as simultaneously providing an AC voltage sample for Irms and Real Power readings.

It's been a busy day of order fulfilment today, all emonTx V3, emonTH and emonTx Shield SMT pre-orders will be shipped by tomorrow. Sorry for the slight delay, waiting for these adapter units to arrive was the cause for the delay. Hopefully the orders should all arrive at the destinations before Christmas.

Onwards! We're working hard to keep shipping out right up until Christmas. See my last post regarding last postage days for guaranteed delivery before Christmas.

Setup Emoncms on a RaspberryPI with a Hard drive

After the SD Card write issue setback I've been keen to get an alternative that works for running emoncms locally on a raspberrypi. I now have my home monitoring setup running off a raspberrypi with a hard drive (as recommended by Paul Reed, thanks Paul!) and a pihub as in the picture above and I've uploaded an image and details of this option to the emoncms.org documentation here:

http://emoncms.org/site/docs/raspberrypihdd

I think there are quite a few benefits to running emoncms locally especially as we start to add the ability to control things from emoncms in addition to monitoring. Being able to set a heating profile shouldn’t depend on a working internet connection or a remote server's uptime. Control needs to be as robust and secure as possible.

I also think there is an important benefit of improved privacy: energy, temperature and other environmental data is often sensitive personal data. Storing data locally is probably the best way to ensure you have full control over the privacy of your data, as it never needs to leave the home unless, that is, you decide to share certain feeds for an open dataset or enable remote access while away or on the move.

Another benefit that I make regular use of is developing new features, such as the recent open source building energy modelling work (see application guide). Because my data and the application were local, I could edit the visualisations and model and see the results on my data straight away.

The emoncms raspberrypi hard drive image comes with quite a few add-on emoncms modules installed out of the box including the Event and MQTT module by Nick Boyle (elyobelyob). I tested the NMA notify my android feature and it worked great.

Here are all the add-on modules installed out of the box:

- PacketGen, A new module for sending control packets.

- Event module for sending Prowl, NMA, Curl, Twitter and Email notifications.

- MQTT module for subscribing to MQTT topics containing data to be logged to emoncms.

- Notify module sends an email if feeds become inactive

- Energy module. Create a David MacKay style energy stack

- OpenBEM module. Open Source Building Energy Model. Investigate the thermal performance of buildings.

- Report module. Creates electricity use reports including appliance list exercise

The boot.img goes on the SD card and just tells the PI to use the filesystem that is on the external harddrive. The second image pi_hdd_stack.img needs to be written to the hard drive.

With the harddrive connected to the PI through a powered hub and the boot SD card inserted in the PI, the PI should just boot up and be ready to use.

Environmental Considerations

Adding an HDD to a Raspberry Pi setup does increase power consumption; however, I measured that my 2.5" 1TB USB external hard drive connected to the Pi only added 1.8W to the overall setup (3.9W). This modest increase in power can probably be justified.

Obviously, purchasing an HDD has a considerable embodied energy; however, there is an excess of low-capacity second-hand IDE hard drives commonly sold on eBay which are perfect for this application. A 20GB drive (masses of space for emoncms!) can be picked up for a few £. With regular backups it should provide a few years of service. It's great to be able to make use of something which otherwise would have to be thrown away or at best recycled for raw materials.
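To put the measured figures into annual terms, a constant load in watts converts to kWh per year as watts × 8760 hours / 1000. A quick sketch of the arithmetic, assuming the setup runs continuously all year:

```python
HOURS_PER_YEAR = 24 * 365  # 8760, ignoring leap years


def annual_kwh(watts, hours=HOURS_PER_YEAR):
    """Energy used in a year by a constant load, in kWh."""
    return watts * hours / 1000.0


if __name__ == "__main__":
    print(round(annual_kwh(1.8), 1))  # the extra 1.8W drawn by the hard drive
    print(round(annual_kwh(3.9), 1))  # the 3.9W figure quoted for the overall setup
```

That works out at roughly 16 kWh per year for the drive and about 34 kWh per year for the 3.9W figure.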

Adding control to emoncms: RFM12b Packet Generator

I've had many conversations with people recently about controlling things like radiator set points, boiler thermostats, heatpumps and so on.

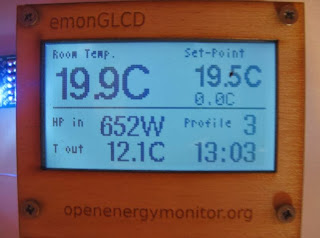

I visited John Cantor of http://heatpumps.co.uk/ today, who is implementing an impressive control and monitoring system for a heatpump central heating system which involves EmonGLCDs with relays connected to radiator valve actuators in each room. The buttons on the EmonGLCD are used to set the target temperature for the room, and information is displayed on the EmonGLCD about heatpump power input and outside temperature.

On the way back I stopped at the Centre for Alternative Technology where Marnoch, who is on a student placement there, is working on something similar but for a radiator system connected to a wood chip boiler.

Both Glyn and Ynyr are keen to get boiler thermostat controllers working in their houses and Ken Boak who designed the NanodeRF phoned up the other day suggesting we start an openenergymonitor sub project and discussion on this topic, Ken's been working on central heating control for quite some time.

It's something I have a growing interest in too, as we will soon be installing a heatpump system at home which has a lot of opportunity for control and monitoring to make sure it's running efficiently.

So I've been giving some thought to how control features could be added to emoncms in a more integrated way than initial efforts I've made before.

I like the simplicity of the way Jean Claude Wippler designed the jeelib library to use C structures as the method by which data is packaged and sent via the RFM12b radios. So I thought that a good basis for adding control functionality to emoncms would be to first make it possible for emoncms to easily generate RFM12b data packets in a format that can be easily decoded with a struct definition on the listening control nodes. Emoncms would then broadcast this control packet, containing all the state information about the system, at a regular interval in the same way as an emontx broadcasts its readings. Listening nodes can then be programmed to selectively use variables in that control packet depending on what they need to do.
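To illustrate the struct idea, here is a small Python sketch using the standard `struct` module to build and decode a control packet. The field layout (heating state, setpoint in hundredths of a degree, hour, minute) is entirely hypothetical and is not the packet format used by the packetgen module. It relies on the ATmega being little-endian with no padding between struct members, so a matching C struct on the node would decode the same bytes.

```python
import struct

# Hypothetical layout, matching an Arduino struct along the lines of:
#   typedef struct { byte heating; int setpoint; byte hour; byte minute; } ControlPacket;
# '<' = little-endian with no padding, B = uint8, h = int16 (an AVR int is 16 bits).
PACKET_FORMAT = "<BhBB"


def pack_control_packet(heating_on, setpoint_c, hour, minute):
    """Encode the control state as the bytes a listening node would receive."""
    return struct.pack(PACKET_FORMAT, int(heating_on),
                       round(setpoint_c * 100), hour, minute)


def unpack_control_packet(data):
    """Decode the bytes back into named fields."""
    heating, setpoint, hour, minute = struct.unpack(PACKET_FORMAT, data)
    return {"heating_on": bool(heating), "setpoint_c": setpoint / 100.0,
            "hour": hour, "minute": minute}


if __name__ == "__main__":
    packet = pack_control_packet(True, 19.5, 7, 30)
    print(list(packet))
    print(unpack_control_packet(packet))
```

A node that only cares about the heating state can read the first byte and ignore the rest, which is the "selectively use variables" idea described above.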

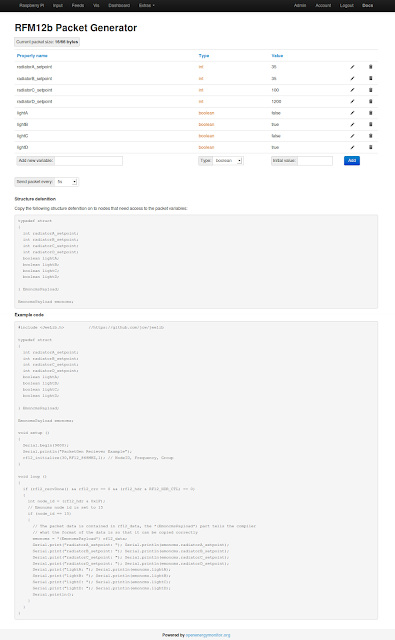

I've put together an initial working concept of a module that can be used to do this and it's up on GitHub here: https://github.com/emoncms/packetgen

Here's a screenshot of the main interface:

One of the nice things about it is that as you generate your control packet, the module generates example Arduino code to make it easier to start coding a control node. You can just copy the code, drop it into the Arduino IDE and upload it to an ATmega + RFM12B based node, and it should start receiving the control packet right away.

I see this module and control in general being run on a local installation of emoncms running on a raspberrypi + harddrive combination rather than emoncms.org. The next step is an image with all this installed and working out of the box.

2013 Christmas Ordering Info - Royal Mail Last Posting Dates for Delivery before Christmas

It's that time of year again! We're busier than ever with the launch of our new hardware modules this week. Thanks to everyone who's helped support the project by ordering through the shop or contributing to the project in some way, shape or form. All pre-orders for the new hardware will have been shipped by the beginning of next week.

Since I last posted a similar post this time last year we have shipped over 2500 orders and have moved into a new office.

Here are the last posting dates for delivery in time for Christmas according to Royal Mail. We will continue to ship right up until Christmas but to avoid disappointment if you would like your items before Christmas please get your order in soon!

International Airmail including Airsure® and International Signed For™

- Wednesday 4 December: Asia, Far East (including Japan), New Zealand

- Thursday 5 December: Australia

- Friday 6 December: Africa, Caribbean, Central & South America, Middle East

- Monday 9 December: Cyprus, Eastern Europe

- Tuesday 10 December: Canada, France, Greece, Poland

- Friday 13 December: USA

- Saturday 14 December: Western Europe (excluding France, Greece, Poland)

UK Services

- Friday 20 December: 1st Class

- Monday 23 December: Royal Mail Special Delivery Guaranteed™

We will also be shipping between Christmas and new year and as soon as the postal system opens again beginning of Jan.

We will hopefully get another post up when I have a bit more time over the festive period taking stock of the year; what we've achieved and our aims and hopes for next year. Until then, here's a quick couple of photos from the office today:

| emonTH assembled PCB's tested, firmware uploaded and ready to go |

| emonTx V3 cases getting ready to ship..in case you're wondering, yes we did give that poor suffering plant in the background some water later today! |

| Trystan and the dev bench |

| Myself and Trystan 'working', I should add that I'm doing 'Movember' , only a few more days to go, please donate, it's for a good cause |

| Printing postage for order parcels |

| Order fulfillment |

New hardware is in the shop and starting to ship this week

If you have been following the blog and twitter over the past couple of weeks you will probably have noticed that we've been gearing up for and starting manufacture (part 1 and part 2) of a trio of new hardware units:

The emonTx V3 is our new flagship energy monitoring wireless node. Thanks to community contributions and user feedback we've improved on the previous through-hole emonTx design while trying to keep as much flexibility and user customisation potential as possible.

Hardware

The unit uses the same Atmel ATmega328 Arduino compatible microprocessor and all free I/O pins have been made accessible on a screw-terminal block for easy expandability.

Apart from the obvious change in enclosure and move to SMT pre-assembled electronics, the most notable hardware improvement is the addition of an AC-DC circuit. This enables the emonTx V3 to be powered from a single AC-AC plug-in adapter while simultaneously providing an AC voltage sample for VRMS and Real Power measurements.
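For anyone wondering why the AC voltage sample matters: with simultaneous voltage and current samples, Real Power is the average of the instantaneous products, while Vrms and Irms are root-mean-square values. The following is a simplified Python sketch of those calculations, not the emonTx firmware or EmonLib. It assumes the samples are already calibrated to volts and amps, have any DC offset removed, and cover a whole number of mains cycles.

```python
import math


def power_metrics(v_samples, i_samples):
    """Compute real power, Vrms, Irms, apparent power and power factor."""
    n = len(v_samples)
    if n == 0 or n != len(i_samples):
        raise ValueError("need equal, non-empty voltage and current sample lists")
    # Real power: mean of the instantaneous v * i products.
    real_power = sum(v * i for v, i in zip(v_samples, i_samples)) / n
    # RMS: square root of the mean of the squares.
    vrms = math.sqrt(sum(v * v for v in v_samples) / n)
    irms = math.sqrt(sum(i * i for i in i_samples) / n)
    apparent_power = vrms * irms
    power_factor = real_power / apparent_power if apparent_power else 0.0
    return {"real_power": real_power, "vrms": vrms, "irms": irms,
            "apparent_power": apparent_power, "power_factor": power_factor}


if __name__ == "__main__":
    # One cycle of a synthetic 240 V, 2 A resistive load (current in phase with voltage).
    n = 200
    v = [240 * math.sqrt(2) * math.sin(2 * math.pi * k / n) for k in range(n)]
    i = [2 * math.sqrt(2) * math.sin(2 * math.pi * k / n) for k in range(n)]
    print({name: round(value, 2) for name, value in power_metrics(v, i).items()})
```

Without the voltage sample only Irms is available, so power has to be estimated as apparent power using an assumed fixed voltage.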

Software

Instead of having a separate firmware sketch example for each function as we had with the emonTx V2 (e.g. Real Power (CT123_voltage), Apparent Power (CT123), Temperature etc.) we have for the emonTx V3 made progress combining all these into one 'auto-adaptable' piece of firmware. We have called it emonTxV3_discrete_sampling, it's based on EmonLib but no longer relies on the internal bandgap of the Atmel chip for ADC measurements. This will increase ADC accuracy and reduce the need for additional calibration. The main features of the firmware at present are:

The name 'discrete sampling' was used to draw distinction between the EmonLib based discrete sampling approach and Continuous Sampling. A Continuous Sampling PLL example is included in the emonTx V3 examples, after some testing we hope to integrate this into the main firmware: the emonTx V3 could switch to a more accurate continuous sampling mode when powered from AC-AC adapter of 5V DC USB.

The emonTx V3 is now available from the OpenEnergyMonitor shop.

The emonTH is a new unit to replace the Low Power emonTx Temperature node (which was bit of a cludge!).

Working with community groups like the Manchester Carbon Coop who are involved in retro-fitting houses with insulation we realised the need for an easy to deploy, long lasting temperature and humidity monitoring node. Many emonTHs can be deployed thought a house or building to inform a building performance model, heating control system or just for general interest! The emonTH supports DHT22 and DS18B20 sensors as well as simultaneous indoor and outdoor temperature readings using a remote DS18B20 sensor wired into terminal block.

As with the emonTx the data is logged via a web-connected base station to emoncms server for logging, graphing and analysis. As with all our hardware, the emonTH is open-source and Arduino IDE compatible using the ATmega328 with the Arduino serial bootloader.

The emonTH is now available from the OpenEnergyMonitor shop.

Arduino compatible energy monitor shield add-on.

For prototyping and lab experiences an Arduino is a great tool to quickly try something. The emonTx Shield allows energy monitoring functions to be added to a standard Arduino Uno / Leonardo or Yun as well as a Nanode RF. As with all our hardware units we provide as many software examples to help you get up and running. The emonTx Shield SMT uses Surface Mount (SMT) pre-assembled electronics. To keep costs down the through-hole components (headers and connectors) require manual solder assembly.

The emonTx Shield SMT is now available from the OpenEnergyMonitor shop.

1. emonTx V3

The emonTx V3 is our new flagship energy monitoring wireless node. Thanks to community contributions and user feedback we've improved on the previous through-hole emonTx design while trying to keep as much flexibility and user customisation potential as possible.

| Full emonTx V3 system with 4 x CT sensors and an AC-AC power adapter: Real Power, Power Factor and VRMS measurements |

Hardware

The unit uses the same Atmel ATmega328 Arduino compatible microprocessor and all free I/O pins have been made accessible on a screw-terminal block for easy expandability.

Apart from the obvious change in enclosure and move to SMT pre-assembled electronics, the most notable hardware improvement is the addition of an AC-DC circuit. This enables the emonTx V3 to be powered from a single AC-AC plug-in adapter while simultaneously providing an AC voltage sample for VRMS and Real Power measurements.
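To illustrate why the AC voltage sample matters, here is a minimal Python sketch of the arithmetic behind VRMS, Real Power and Power Factor, worked on synthetic samples. It is not the emonTx firmware or EmonLib code; the function names (`synthetic_cycle`, `power_metrics`) and the example values are assumptions made purely for illustration.

```python
import math


def synthetic_cycle(v_peak, i_peak, phase_deg, n=200):
    """Build one mains cycle of voltage and current samples (hypothetical test data).

    The current lags the voltage by phase_deg degrees.
    """
    phase = math.radians(phase_deg)
    voltage = [v_peak * math.sin(2 * math.pi * k / n) for k in range(n)]
    current = [i_peak * math.sin(2 * math.pi * k / n - phase) for k in range(n)]
    return voltage, current


def power_metrics(voltage, current):
    """Compute RMS values, real power, apparent power and power factor.

    Assumes both lists were sampled at the same instants and already have
    any DC offset removed.
    """
    if not voltage or len(voltage) != len(current):
        raise ValueError("need two non-empty sample lists of equal length")
    n = len(voltage)
    v_rms = math.sqrt(sum(v * v for v in voltage) / n)
    i_rms = math.sqrt(sum(i * i for i in current) / n)
    # Real power is the mean of the instantaneous products v * i.
    real_power = sum(v * i for v, i in zip(voltage, current)) / n
    # Without a voltage sample only this product of RMS values is available.
    apparent_power = v_rms * i_rms
    power_factor = real_power / apparent_power if apparent_power > 0 else 0.0
    return {
        "v_rms": v_rms,
        "i_rms": i_rms,
        "real_power": real_power,
        "apparent_power": apparent_power,
        "power_factor": power_factor,
    }
```

For example, `power_metrics(*synthetic_cycle(240 * math.sqrt(2), 2 * math.sqrt(2), 60))` describes a 240 V, 2 A load whose current lags by 60 degrees: the apparent power is about 480 VA but the real power is only about 240 W, a difference that cannot be seen without the voltage waveform.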

| emonTx V3 with ATmega328, RFM12B and on-board 3 x AA batteries |

Software

Instead of having a separate firmware sketch example for each function, as we had with the emonTx V2 (e.g. Real Power (CT123_voltage), Apparent Power (CT123), Temperature etc.), for the emonTx V3 we have made progress combining all these into one 'auto-adaptable' piece of firmware. We have called it emonTxV3_discrete_sampling. It's based on EmonLib but no longer relies on the internal bandgap of the Atmel chip for ADC measurements. This will increase ADC accuracy and reduce the need for additional calibration. The main features of the firmware at present are:

- CT detection – only sampling from required channels

- Power supply detection & presence of AC-AC adapter – VRMS and Real Power measurements are enabled if powering from an AC-AC adapter, and power saving mode is enabled if powering from batteries

- Temperature sensor detection – external DS18B20 temperature sensors are auto detected and temperature readings added to measurements
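The decision logic described in the list above could be sketched roughly as follows. This is a Python illustration only, not the emonTxV3_discrete_sampling source; the `Detected` class, the `plan_measurements` function and all field names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detected:
    """What the firmware is assumed to find at start-up (hypothetical names)."""
    ct_present: List[bool]        # one flag per CT input, True if a CT is plugged in
    ac_adapter_present: bool      # True if an AC-AC adapter is connected
    on_batteries: bool            # True if the unit is running from batteries
    temperature_sensors: int      # number of DS18B20 sensors found


def plan_measurements(d: Detected) -> dict:
    """Turn the detection results into a simple measurement plan."""
    return {
        # Only sample the channels that actually have a CT connected.
        "ct_channels": [n + 1 for n, present in enumerate(d.ct_present) if present],
        # VRMS and Real Power need the AC voltage sample from the adapter.
        "vrms_and_real_power": d.ac_adapter_present,
        # Power saving only matters when running from batteries.
        "power_saving": d.on_batteries,
        # Each detected sensor adds one temperature reading.
        "temperature_readings": d.temperature_sensors,
    }
```

A unit with CTs on inputs 1 and 3, an AC-AC adapter and one temperature sensor would, in this sketch, sample channels 1 and 3, report VRMS and Real Power, and add a single temperature reading.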

The name 'discrete sampling' was used to draw a distinction between the EmonLib-based discrete sampling approach and Continuous Sampling. A Continuous Sampling PLL example is included in the emonTx V3 examples. After some testing we hope to integrate this into the main firmware: the emonTx V3 could switch to a more accurate continuous sampling mode when powered from an AC-AC adapter or 5V DC USB.

The emonTx V3 is now available from the OpenEnergyMonitor shop.

2. emonTH

Wireless Temperature and Humidity Node

| emonTH Temperature and Humidity Node |

The emonTH is a new unit to replace the Low Power emonTx Temperature node (which was a bit of a kludge!).

| emonTH installed in my house, I will be keeping a close eye on temperature and humidity in my house over the winter in North Wales |

Working with community groups like the Manchester Carbon Coop, who are involved in retrofitting houses with insulation, we realised the need for an easy-to-deploy, long-lasting temperature and humidity monitoring node. Many emonTHs can be deployed throughout a house or building to inform a building performance model, heating control system or just for general interest! The emonTH supports DHT22 and DS18B20 sensors as well as simultaneous indoor and outdoor temperature readings using a remote DS18B20 sensor wired into the terminal block.

As with the emonTx, the data is sent via a web-connected base station to an emoncms server for logging, graphing and analysis. As with all our hardware, the emonTH is open-source and Arduino IDE compatible, using the ATmega328 with the Arduino serial bootloader.

| The emonTH comes pre-assembled and has an on-board DC-DC converter to increase battery life. |

The emonTH is now available from the OpenEnergyMonitor shop.

3. emonTx Arduino Shield SMT

Arduino compatible energy monitor shield add-on.

| emonTx Shield SMT on Arduino Leonardo |

For prototyping and lab experiments an Arduino is a great tool to quickly try something. The emonTx Shield allows energy monitoring functions to be added to a standard Arduino Uno, Leonardo or Yun as well as a Nanode RF. As with all our hardware units, we provide many software examples to help you get up and running. The emonTx Shield SMT uses Surface Mount (SMT) pre-assembled electronics. To keep costs down, the through-hole components (headers and connectors) require manual solder assembly.

The emonTx Shield SMT is now available from the OpenEnergyMonitor shop.